Introduction

According to the Nginx documentation:

Load balancing refers to efficiently distributing incoming network traffic across a group of backend servers, also known as a server farm or server pool.

Modern high‑traffic websites must serve hundreds of thousands, if not millions, of concurrent requests from users or clients and return the correct text, images, video, or application data, all in a fast and reliable manner. To cost‑effectively scale to meet these high volumes, modern computing best practice generally requires adding more servers.

A load balancer acts as the “traffic cop” sitting in front of your servers and routing client requests across all servers capable of fulfilling those requests in a manner that maximizes speed and capacity utilization and ensures that no one server is overworked, which could degrade performance. If a single server goes down, the load balancer redirects traffic to the remaining online servers. When a new server is added to the server group, the load balancer automatically starts to send requests to it.

In this manner, a load balancer performs the following functions:

- Distributes client requests or network load efficiently across multiple servers

- Ensures high availability and reliability by sending requests only to servers that are online

- Provides the flexibility to add or subtract servers as demand dictates

Setting up Nginx

Installing Nginx

```bash
# install nginx (Debian/Ubuntu); run as root or prefix each command with sudo
apt update
apt install nginx

# check the status of nginx
systemctl status nginx

# start it if it is not running
systemctl start nginx

# enable the service so that nginx starts automatically on boot
systemctl enable nginx
```

Configuring Nginx as a Load Balancer

We make a few assumptions here: the application runs on two servers with the IP addresses 1.1.1.1 and 2.2.2.2, it listens on port 3000 on both, and the domain is loadbalancer.com. Create a virtual-host configuration file with the following content:

```bash
vi /etc/nginx/sites-available/loadbalancer.com.conf
```

```nginx
upstream loadbalancer {
    server 1.1.1.1:3000;
    server 2.2.2.2:3000;
}

server {
    listen 80;
    server_name loadbalancer.com;

    location / {
        proxy_pass http://loadbalancer;
    }
}
```

Points to understand in the above config file:

- We define an upstream block that holds all the load-balancing configuration, such as the load-balancing method and the details of the backend servers.

- In the server block, the location / block forwards requests to the previously defined upstream block by naming it in proxy_pass (http://loadbalancer).

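Next, enable the virtual host by copying its configuration into the sites-enabled directory:

```bash
cp /etc/nginx/sites-available/loadbalancer.com.conf /etc/nginx/sites-enabled/loadbalancer.com.conf

# alternatively, a symlink keeps later edits in sites-available in effect:
# ln -s /etc/nginx/sites-available/loadbalancer.com.conf /etc/nginx/sites-enabled/
```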

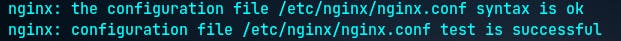

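Test the Nginx configuration:

```bash
nginx -t
```

If the configuration is correct, nginx reports that the configuration file syntax is ok and that the test is successful.

Then reload Nginx to apply the changes:

```bash
nginx -s reload
```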

And that's it: we have successfully set up Nginx as a load balancer. 🎉

Let’s dive deep into the different load balancing methods

Method 1 - Round Robin

In this method, requests are distributed across the servers in turn, taking each server's weight into consideration. Round robin is the default method, so we don't have to specify it explicitly in the upstream block of the virtual-host file.

```nginx
upstream loadbalancer {
    server 1.1.1.1;
    server 2.2.2.2;
}
```

In the example above, no weight is defined explicitly, so each server uses the default weight of 1 and requests are distributed evenly. If we want to assign a weight to each server, we can make the following changes:

```nginx
upstream loadbalancer {
    # the weight parameter is written as weight=N
    server 1.1.1.1 weight=10;
    server 2.2.2.2 weight=20;
}
```

With these weights, the server at 2.2.2.2 receives twice as many requests as the server at 1.1.1.1: out of every 30 requests, 20 go to 2.2.2.2 and 10 go to 1.1.1.1.
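To see how the weights translate into an actual order of requests, here is a minimal Python sketch modelled on the smooth weighted round-robin scheme Nginx uses for its default method. It is a simplification under stated assumptions: it ignores failed servers, max_fails and any dynamic weight adjustment, and the function name smooth_weighted_round_robin is hypothetical.

```python
from collections import Counter


def smooth_weighted_round_robin(weights, n_requests):
    """Return the server chosen for each of n_requests.

    weights: dict mapping a server address to a positive integer weight
    (hypothetical input mirroring the upstream block above).
    """
    total = sum(weights.values())
    current = {server: 0 for server in weights}  # running score per server
    picks = []
    for _ in range(n_requests):
        # Every server earns its weight on each round.
        for server, weight in weights.items():
            current[server] += weight
        # The server with the highest score wins (the first one listed on a tie)...
        chosen = max(current, key=current.get)
        # ...and pays back the total weight so the others can catch up.
        current[chosen] -= total
        picks.append(chosen)
    return picks


if __name__ == "__main__":
    picks = smooth_weighted_round_robin({"1.1.1.1": 10, "2.2.2.2": 20}, 30)
    print(picks[:6])
    print(Counter(picks))
```

With weights 10 and 20 the sketch produces the repeating pattern 2.2.2.2, 1.1.1.1, 2.2.2.2, so the heavier server gets its extra share interleaved with the other server's rather than in one burst. With equal weights it reduces to plain alternation.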

Method 2 - Least Connections

In this method, a request is sent to the server with the least number of active connections, with server weights taken into consideration.

```nginx
upstream loadbalancer {
    least_conn;
    server 1.1.1.1;
    server 2.2.2.2;
}
```
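The selection rule can be sketched in a few lines of Python. This is an illustration, not Nginx's code. The function least_conn and its inputs are hypothetical, and connection counts are passed in rather than tracked. Ties go to the first server listed, whereas Nginx breaks ties with weighted round robin. Weights are modelled by comparing active connections divided by weight.

```python
def least_conn(active, weights=None):
    """Pick the server with the fewest active connections per unit of weight.

    active:  dict mapping server address -> number of active connections
    weights: optional dict mapping server address -> weight (default 1)
    """
    weights = weights or {}
    # min() keeps the first server on a tie, a simplification of Nginx's behaviour.
    return min(active, key=lambda server: active[server] / weights.get(server, 1))


if __name__ == "__main__":
    # 1.1.1.1 is busy with slow requests, so the next request goes to 2.2.2.2.
    print(least_conn({"1.1.1.1": 5, "2.2.2.2": 2}))  # prints 2.2.2.2
```

Unlike round robin, this adapts to requests of uneven duration. A server stuck with slow requests keeps a high count and is passed over until its connections drain.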

Method 3 - IP Hash

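Stateful applications often need every request from a given client to reach the same backend server, even in a highly clustered environment. This is known as session persistence, or sticky sessions. The IP Hash method supports it by using the client's IP address as a hashing key to decide which server receives the request. As a result, the same client keeps reaching the same server unless that server becomes unavailable.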

```nginx
upstream loadbalancer {
    ip_hash;
    server 1.1.1.1;
    server 2.2.2.2;
}
```
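The core idea of IP Hash is that the same key always maps to the same server. The Python sketch below illustrates this under clear assumptions. Like Nginx's ip_hash, it uses the first three octets of an IPv4 address (or the whole IPv6 address) as the key. However, it hashes with zlib.crc32, which is not the hash Nginx uses, so the real client-to-server mapping will differ. The server list and function names are hypothetical.

```python
import ipaddress
import zlib

# Hypothetical backends mirroring the upstream block above.
SERVERS = ["1.1.1.1", "2.2.2.2"]


def hash_key(client_ip):
    """Return the bytes used as the hashing key for a client address."""
    addr = ipaddress.ip_address(client_ip)
    if addr.version == 4:
        return addr.packed[:3]  # first three octets, e.g. 203.0.113
    return addr.packed  # whole IPv6 address


def pick_server(client_ip, servers=SERVERS):
    """Map a client address to one backend: same key, same backend."""
    return servers[zlib.crc32(hash_key(client_ip)) % len(servers)]


if __name__ == "__main__":
    for ip in ["203.0.113.10", "203.0.113.99", "198.51.100.7", "2001:db8::1"]:
        print(ip, "->", pick_server(ip))
```

Because 203.0.113.10 and 203.0.113.99 share their first three octets, they always land on the same backend. A side effect worth knowing: many clients behind one /24 network or one proxy all stick to a single server, which can unbalance the load.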

Some important points

Using a server as a backup

Suppose we want a backup server that receives requests only when the primary servers are unavailable. To do that, add the backup parameter to that server in the upstream block. Note that backup cannot be combined with the ip_hash method (nor with the hash and random methods).

```nginx
upstream loadbalancer {
    server 1.1.1.1;
    server 2.2.2.2;
    server 3.3.3.3 backup;
}
```
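The rule behind backup can be written as a small pool-selection step. In this Python sketch the healthy set is a hypothetical input standing in for Nginx's own failure tracking, and available_pool is an illustrative name. The balancing method (for example round robin) would then pick a server from the returned pool.

```python
def available_pool(primary, backup, healthy):
    """Return the servers eligible to receive the next request.

    primary, backup: lists of server addresses from the upstream block
    healthy: set of addresses currently considered available (assumed input)
    """
    pool = [server for server in primary if server in healthy]
    if pool:
        return pool  # backups stay idle while any primary server is up
    pool = [server for server in backup if server in healthy]
    if not pool:
        raise RuntimeError("no upstream server available")
    return pool


if __name__ == "__main__":
    primary, backup = ["1.1.1.1", "2.2.2.2"], ["3.3.3.3"]
    print(available_pool(primary, backup, {"1.1.1.1", "2.2.2.2", "3.3.3.3"}))
    print(available_pool(primary, backup, {"3.3.3.3"}))
```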

Disabling a server from accepting requests

To stop a server from accepting requests for the time being (for example during maintenance), mark it with the down parameter in the upstream block:

```nginx
upstream loadbalancer {
    server 1.1.1.1;
    server 2.2.2.2;
    server 3.3.3.3 down;
}
```
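To make the effect of down concrete, here is a toy Python sketch that parses single-line server directives like the ones above and round-robins only over servers not marked down. The parser and function names are hypothetical and deliberately minimal. This is not how Nginx reads its configuration, and the sketch handles only lines of the form server ADDRESS [parameters];.

```python
from itertools import cycle

UPSTREAM = """
server 1.1.1.1;
server 2.2.2.2;
server 3.3.3.3 down;
"""


def parse_servers(block):
    """Turn 'server ADDRESS [params...];' lines into (address, params) pairs."""
    servers = []
    for line in block.strip().splitlines():
        tokens = line.strip().rstrip(";").split()
        if tokens and tokens[0] == "server":
            servers.append((tokens[1], set(tokens[2:])))
    return servers


def distribute(block, n_requests):
    """Round-robin n_requests over the servers that are not marked down."""
    active = [addr for addr, params in parse_servers(block) if "down" not in params]
    if not active:
        raise RuntimeError("every server is marked down")
    picker = cycle(active)
    return [next(picker) for _ in range(n_requests)]


if __name__ == "__main__":
    print(distribute(UPSTREAM, 4))  # 3.3.3.3 never appears
```

Removing the down flag puts the server back into rotation without deleting its line, which is handy for maintenance windows.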

Thank you so much for your time